Lead Institution: IST-ID

Research Unit: ISR-Lisboa

Ref.: 2024.14126.UTA

https://doi.org/10.54499/2024.14126.UTA

This project will use LLMs in several roles to improve robotics, aiming to harness their full potential in practical general-purpose deployment scenarios and to apply their advanced reasoning capabilities to the effective planning of complex tasks.

Robotics AI architectures process sensory input into models that can be interpreted by planning software for the purpose of generating lists of low-level tasks for robots to carry out. They then provide methods for interpreting the plan, mapping these tasks onto functionality implemented on the robot. Large Language Models (LLMs) are well-known for their ability to interpret and output human-like natural language and hold the potential for seamless human interaction. Recently, LLMs have been used for robotics because – like planning software – they can be used to decompose complex tasks into lists of simpler tasks. LLMs can, for instance, take a statement like, “clean the table,” and turn that into a list of instructions. The FOMO-HODOR project will advance robotic task planning with LLMs, aiming at the development of a computational framework for large-model-based robot control capable of addressing tasks of long duration and elevated complexity.

Specifically, the project considers two research tasks:

- improving the use of LLMs in robot task planning, by decomposing high-level tasking into steps to be executed; and

- addressing the engineering challenges of integrating LLMs with components of a robot’s AI architecture, with the aim of enabling effective interactions with human users, and targeting participation in RoboCup@Home, i.e., a competition where robots perform domestic tasks.

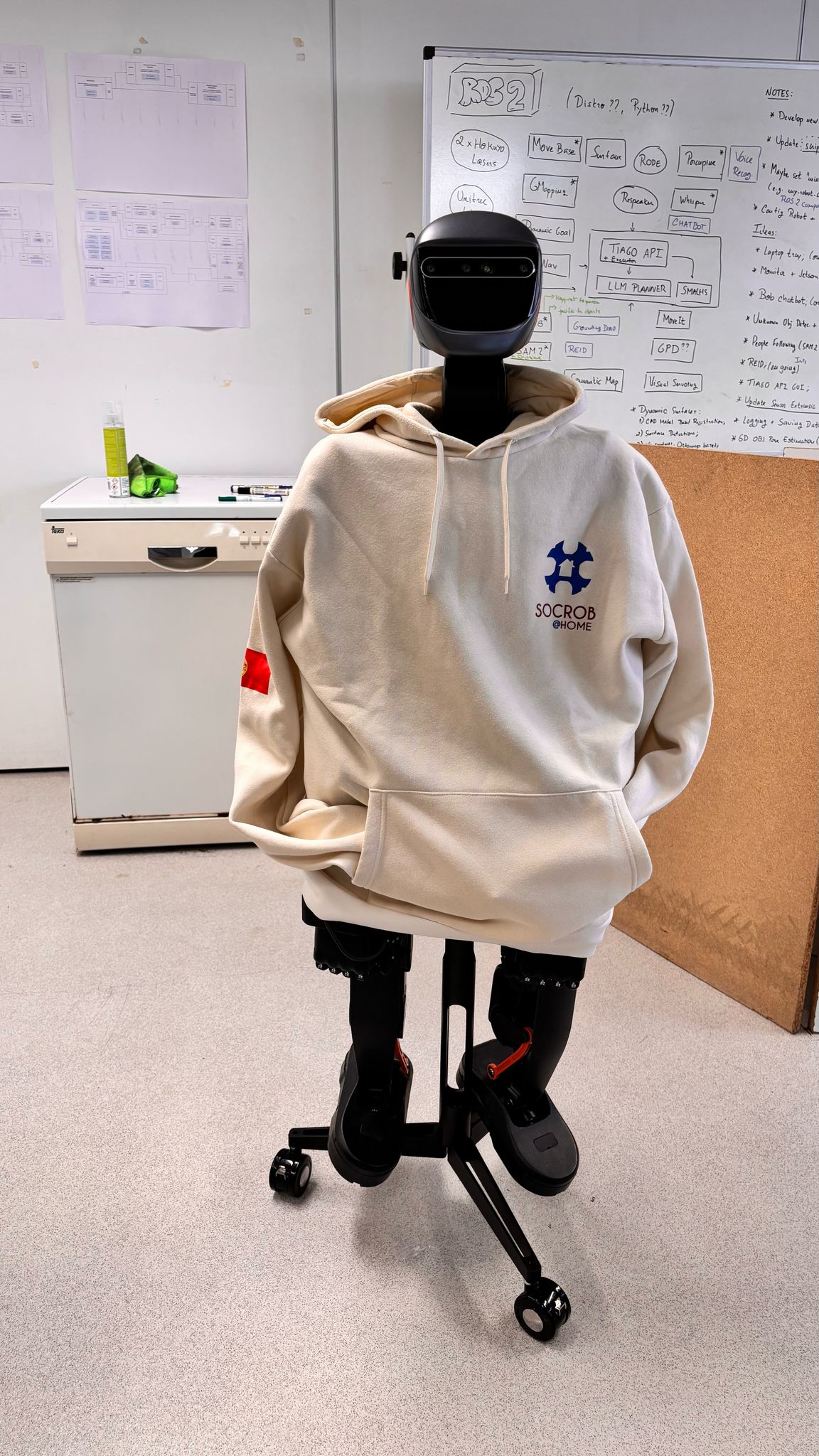

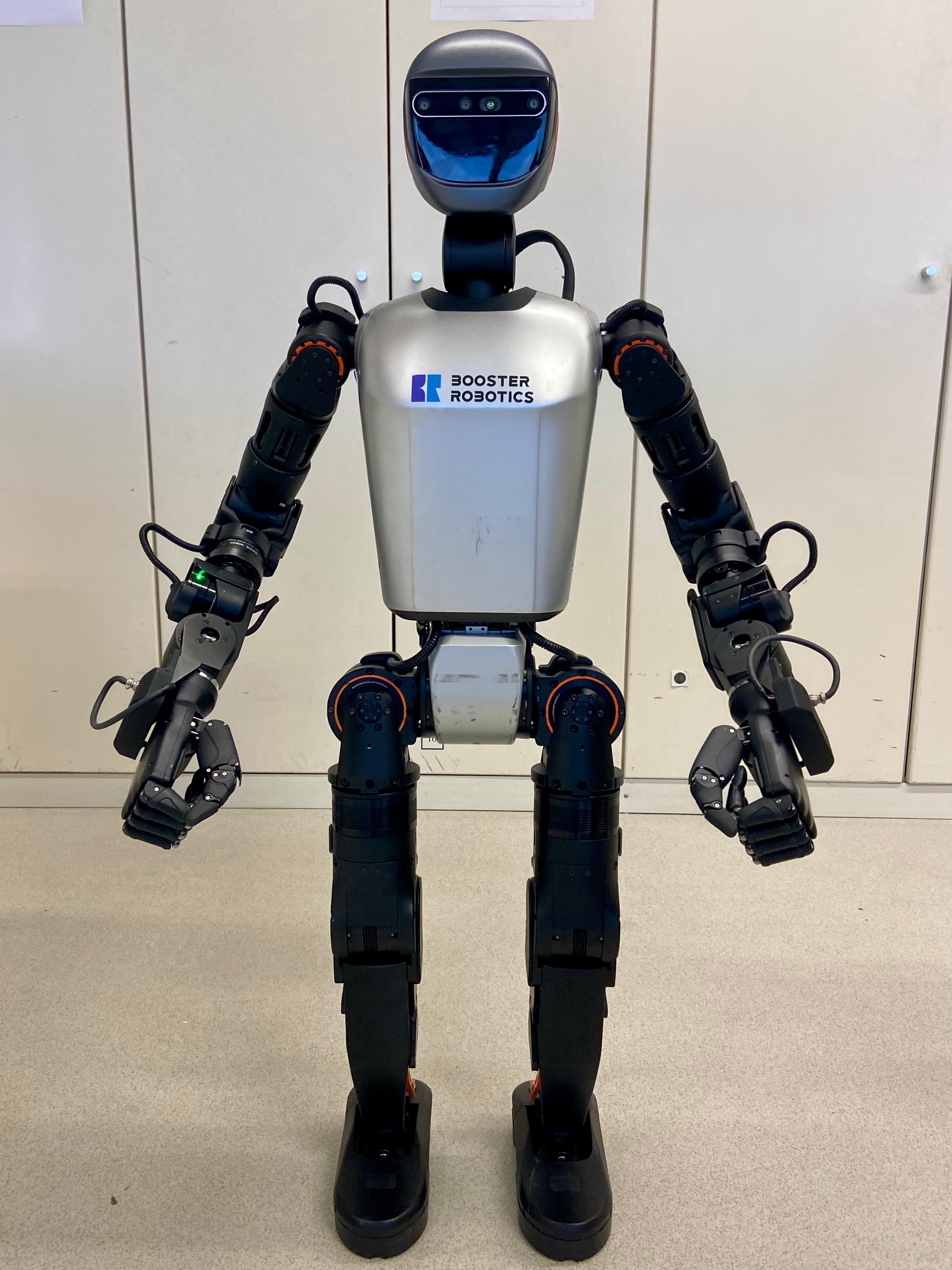

Within the competition, there is a task called General-Purpose Service Robot (GPSR), in which human operators speak commands to the robot. The teams collaborating on this proposal have extensive experience with RoboCup@Home and GPSR. We propose to develop a framework that builds on software developed by both teams and on the work carried out in both research tasks, to run on a new humanoid robot platform, the Booster T1.

There are significant research challenges that must be met to achieve reliable and contextually aware task planning for robots. Prior attempts at LLM integrations on robots have been relatively simplistic. This project seeks to better integrate the technology into a more cohesive robotics architecture, aiming at a FOundation MOdel for HumanOid DOmestic RObots (FOMO-HODOR).

The impact of the envisioned framework can be substantial. LLMs have not yet been well integrated into robotics AI architectures, with existing work mostly using them as a substitute for the current state-of-the-art methods. Unfortunately, LLMs also lack many of the strengths of the state of the art in robotics. Once developed, the framework can be transitioned to a variety of robots performing a variety of tasks, and it is likely to have a far-reaching impact on the broader and more rapid deployment of robot technologies in real-world scenarios.

Partners

Institute for Systems and Robotics (ISR-Lisboa), from Instituto Superior Técnico (Lisbon, Portugal), is a university-based R&D institution where multidisciplinary advanced research activities are developed in the areas of Robotics and Information Processing, including Systems and Control Theory, Signal Processing, Computer Vision, Optimization, AI and Intelligent Systems, and Biomedical Engineering. Applications include Autonomous Land, Marine, Aerial and Space Robotics, medical systems, smart cities, social robots and cognitive robots.

ISR-Lisboa’s mission includes developing advanced research and providing advanced training at the MSc and PhD levels.

Researchers from the Intelligent Robots and Systems group (IRSg) will be participating in the project. IRSg focuses on a holistic view of robotic systems, and has a long track record of robot competitions for research and benchmarking in robotics.

INESC-ID, Instituto de Engenharia de Sistemas e Computadores, Investigação e Desenvolvimento em Lisboa, is an institution dedicated to R&D in the fields of information technology, electronics and telecommunications. The main objectives of INESC-ID are to integrate competences from researchers in electrical engineering and computer science to advance the state of the art in computers, telecommunications, and information systems; to perform technology transfer; to support the creation of technology-based startups; and to provide technical support, also through basic research, applied research and advanced education.

Researchers from the Human Language Technologies (HLT) group of INESC-ID will be participating in the project. The group is actively involved in research activities related to natural language processing and speech processing, and it has a strong track record in the participation/coordination of both national and international projects. The HLT group also has access to a large computational infrastructure featuring servers with NVIDIA GPUs, which are required for the activities that are envisioned in the project.

The University of Texas at Austin (UT Austin) is a public research university and the flagship institution of the University of Texas System, founded in 1883. It is located in Austin, Texas, and is a leading national and global university with high rankings in various fields like computer science, energy, and entrepreneurship. The university serves over 50,000 students and has a mission to advance knowledge and provide educational opportunities.

The research at UT Austin associated with this project will occur within the Bill & Melinda Gates Computer Science Building, a 230,000 square foot building consisting of two wings and an expansive connecting atrium; as well as in the newly renovated Anna Hiss Gym, a historic building that has been converted into a large, collaborative robotics facility, featuring lots of open space and high ceilings.

The researchers have designated space in these buildings with access to many high-end PCs and laptops, as well as communal high-performance computing facilities. There is also a mock apartment space that is ideal for development and testing of general-purpose service robot capabilities.

People

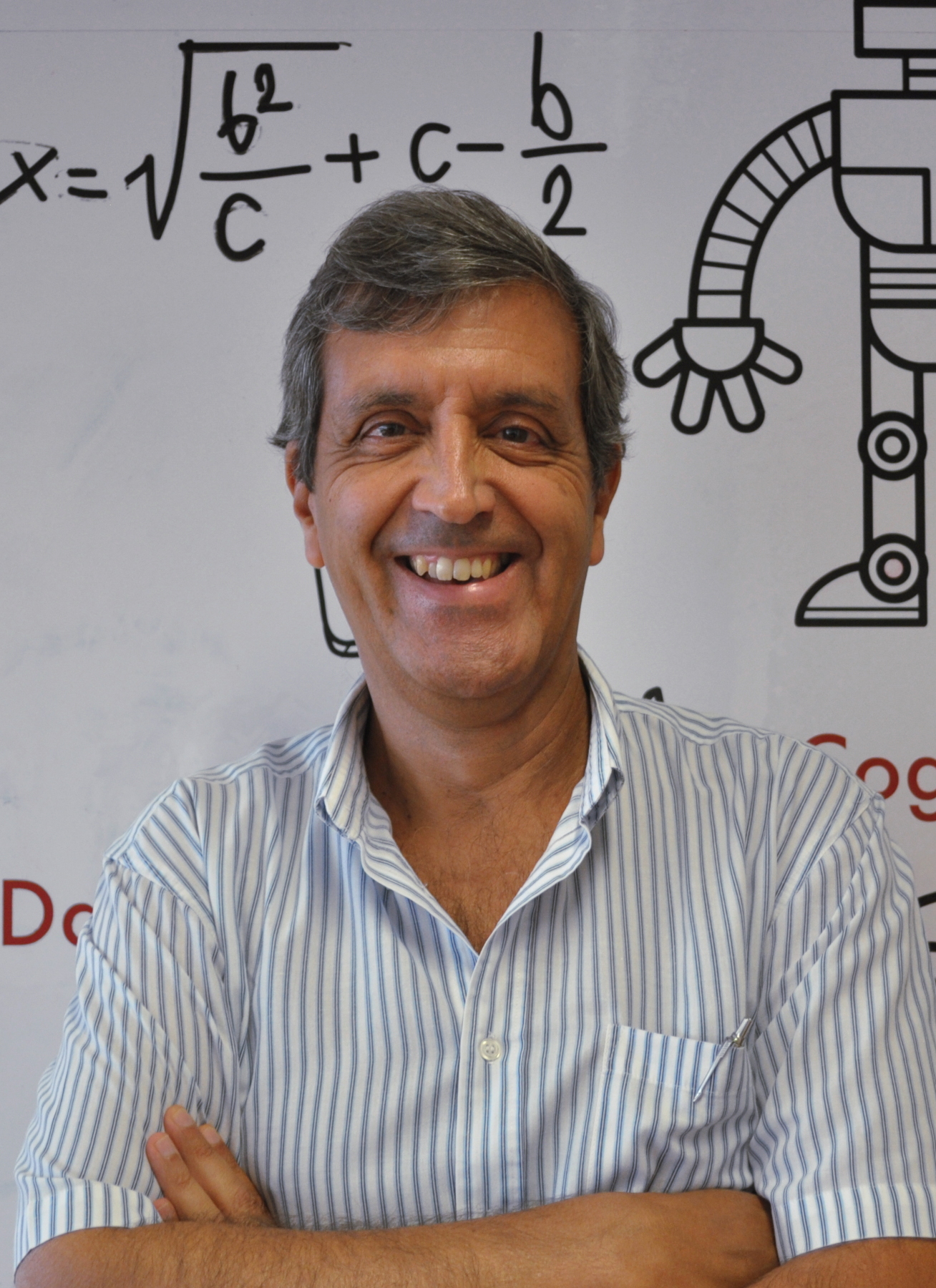

Pedro Lima

Peter Stone

Bruno Martins

Justin Hart

Afonso Certo

Raghav Arora

Yongwoo Kim

Lingyun Xiao

Rodrigo Coimbra

Duarte Santos

Robot Description

LOLA, also known as Booster T1, is a lightweight, mobile service robot ideal for indoor domestic environments. She features enhanced onboard computing for AI workloads, a depth camera for perception, and built-in audio for interaction. LOLA supports rapid experimentation and development of high-level robotic behaviors for service-oriented tasks.

Hardware

- Height of the robot: 1180 mm

- Weight of the robot: 30 kg (without fingered hands)

- Walking Speed: 0.8 m/s

- Number of degrees of freedom: 23

- 2 InspireRobots RH56DFX 6 DoF hands

- Type of motors: Booster Robotics Integrated Joints with Max Torque 7 Nm, 36 Nm, 60 Nm, 90 Nm, 130 Nm

- Sensors:

  - Camera: RealSense D455 with IR disabled

  - Gyro: 9-Axis IMU

- Control unit: Intel i7 1370P

- Perception and Decision Unit: NVIDIA AGX Orin 32GB

- Materials: 7075 Aluminum Alloy and Engineering Plastics

- Battery: 13S3P 10.5Ah Ternary lithium battery

Objectives

The project is organized into two separate technical tasks and will lead to the participation of a joint team in RoboCup@Home 2026.

T1 – Foundation Models for Robot Action Planning

Task 1 aims to leverage LLMs to connect natural language to the robot’s internal representations. State-of-the-art robotics architectures use software called a “planner” to plan the robot’s actions. The shortcoming of planner-based architectures is the need to encode every possible configuration of the world or task to be performed into the planner. If something new is requested, it must be encoded into the planner. We will use LLMs to translate natural language commands into lists of actions expressed in natural language (e.g., so that the robot can also communicate to the user the actions that will be performed) and, from this intermediate representation, into a formal language (such as that used by a planner) describing the execution plan. The actions are either robot actions, to be executed in the physical world, or update and retrieval actions to interact with a working memory. Research within this task will look into aspects such as how high-level actions can be refined or verified by a classical planner, how LLMs can be prompted or fine-tuned to fuse user instructions with other sensor information, and how to represent the robot’s working memory and action knowledge.
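As an illustration only, the two-stage representation described above can be sketched in Python. This is a minimal sketch under stated assumptions, not the project's implementation: the operator names (`retrieve`, `goto`, `pick`, `place`, `update`), the s-expression output format, and the function `stub_decompose` are all hypothetical, and the LLM call is replaced by a hand-written plan for a single command.

```python
from dataclasses import dataclass
from enum import Enum


class ActionKind(Enum):
    ROBOT = "robot"    # executed in the physical world
    MEMORY = "memory"  # update or retrieval on the working memory


@dataclass(frozen=True)
class Action:
    kind: ActionKind
    name: str         # hypothetical operator name, e.g. "pick"
    args: tuple       # hypothetical symbolic arguments
    description: str  # natural-language form that can be spoken to the user

    def to_formal(self) -> str:
        """Render the action as a planner-style s-expression."""
        return "(" + " ".join((self.name, *self.args)) + ")"


# Hypothetical operator table: operator name -> expected number of arguments.
KNOWN_OPERATORS = {"retrieve": 2, "goto": 1, "pick": 1, "place": 2, "update": 3}


def stub_decompose(command: str) -> list:
    """Stand-in for the LLM: returns a canned plan for one known command."""
    if command.strip().lower() != "clean the table":
        raise ValueError(f"no canned plan for: {command!r}")
    return [
        Action(ActionKind.MEMORY, "retrieve", ("objects_on", "table"),
               "Recall which objects are on the table."),
        Action(ActionKind.ROBOT, "goto", ("table",), "Walk to the table."),
        Action(ActionKind.ROBOT, "pick", ("cup",), "Pick up the cup."),
        Action(ActionKind.ROBOT, "place", ("cup", "sink"),
               "Place the cup in the sink."),
        Action(ActionKind.MEMORY, "update", ("location", "cup", "sink"),
               "Remember that the cup is now in the sink."),
    ]


def unsupported_actions(plan, operators):
    """Return actions whose name or argument count the operator table rejects."""
    return [a for a in plan if operators.get(a.name) != len(a.args)]


if __name__ == "__main__":
    plan = stub_decompose("clean the table")
    for action in plan:
        # Natural-language step alongside its formal counterpart.
        print(f"{action.description:45s} {action.to_formal()}")
    print("unsupported:", unsupported_actions(plan, KNOWN_OPERATORS))
```

Keeping the natural-language description and the formal rendering on the same object lets the robot announce each step while a separate check, here a simple operator table standing in for a classical planner, rejects actions the robot cannot execute.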

T2 – Foundation Model Interaction with Other Components

Task 2 will address the engineering challenges involved in integrating LLMs with robots, with the aim of enabling effective interactions with human users. These challenges encompass the support for seamless and robust interactions through natural language, the combined use of information from the robot’s sensors and from the working memory, and also all the technical considerations associated with deploying LLMs, either onboard the robot or remotely via external computational resources. LLMs have demonstrated the ability to emulate the inference of human intentions, but augmenting LLM inputs with richer descriptions of the environment in which the robot operates and additional information about the operator’s behavior will take better advantage of these capabilities. Task 2 will also involve important research challenges, e.g., related to the adaptation of speech recognition and synthesis components for this specific application or related to the use of information derived from vision models within LLMs.

LisTex United

This is the joint ISR-Lisboa/IST and UT Austin team that will compete in RoboCup@Home 2026.

Qualification video: https://youtu.be/1foYfvtJSu4

Media

Besides the usual reporting in technical projects, we plan to:

- Regularly disseminate our progress here and on social media

- Participate in RoboCup@Home 2026

- Organize a final workshop in September 2026 to disseminate the project results

Publications

[1] Afonso Certo, Bruno Martins, Carlos Azevedo, and Pedro Lima. “Large Language Model-Based Robot Task Planning from Voice Command Transcriptions”, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2025), 2025.

[2] Bo Liu, Yuqian Jiang, Xiaohan Zhang, Qiang Liu, Shiqi Zhang, Joydeep Biswas, and Peter Stone. “LLM+P: Empowering Large Language Models with Optimal Planning Proficiency”, arXiv preprint arXiv:2304.11477, 2023.

Contacts

Pedro U. Lima

pedro.lima (at) tecnico.ulisboa.pt

+351 21 841 8289

Torre Norte, Av. Rovisco Pais 1, 1049-001 Lisboa