Funding

FRARIM: Human-robot interaction with field robots using augmented reality and interactive mapping

PTDC/EIA-CCO/113257/2009

LARSyS Plurianual Budget

PEst-OE/EEI/LA0009/2011

Field robotics is the use of sturdy robots in unstructured environments. One important example of such a scenario is Search And Rescue (SAR) operations, which seek out victims of catastrophic events in urban environments. While advances in this domain have the potential to save human lives, many challenging problems still hinder the deployment of SAR robots in real situations. This project tackles one such crucial issue: effective real-time mapping. To address this problem, we adopt a multidisciplinary approach, drawing on both Robotics and Human Computer Interaction (HCI) techniques and methodologies.

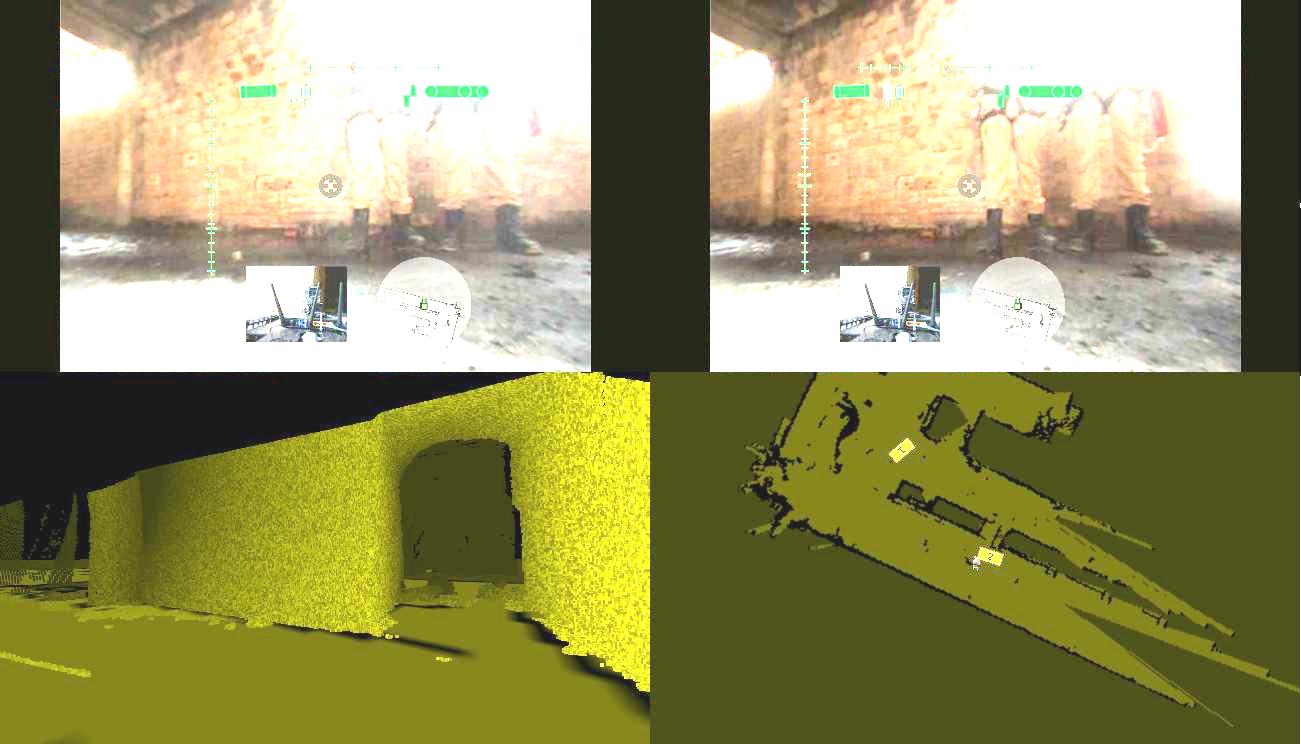

To achieve effective human-robot interaction (HRI), we propose presenting the feeds of a stereo camera pair on the robot to the operator through an Augmented Reality (AR) head-mounted display (HMD). This will give the operator an immersive experience and can display rich contextual information. To further enhance this display, we will superimpose mapping data generated by 6D-SLAM (Simultaneous Localization And Mapping) algorithms on the video feed using AR techniques. Three display modes will be available: (1) a top-view 2D map, to aid navigation and provide an overview of the space; (2) a rendered 3D model, allowing the operator to explore a virtual model of the environment in detail; and (3) a rendered 3D model superimposed on the camera images and synchronized with the operator's field of view, forming an augmented camera view. To achieve this last mode, we will track the orientation of the HMD and use it to control both the orientation of the robot's cameras and the rendering of the AR scene.
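As a rough illustration of the third display mode, the sketch below projects a 3D map point, expressed in the robot frame, into the image of a pan-and-tilt camera whose angles follow the HMD. It is a minimal sketch, not the project's software: it assumes an ideal pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), a camera located at the intersection of the pan and tilt axes (no mounting offsets), and a robot frame with x forward, y left and z up. The function `project_map_point` and its parameters are illustrative names.

```python
import math

import numpy as np


def rot_z(a: float) -> np.ndarray:
    """Right-handed rotation about the z axis (pan / yaw)."""
    c, s = math.cos(a), math.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def rot_y(a: float) -> np.ndarray:
    """Right-handed rotation about the y axis (tilt); positive values point the camera down."""
    c, s = math.cos(a), math.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def project_map_point(p_robot, pan, tilt, fx=500.0, fy=500.0, cx=320.0, cy=240.0):
    """Project a 3D point (robot frame: x forward, y left, z up) to pixel (u, v).

    pan/tilt are the camera angles, e.g. copied from the HMD head tracker.
    The intrinsics are hypothetical placeholder values. Returns None when the
    point lies behind the camera.
    """
    # Orientation of the camera body frame in the robot frame: pan, then tilt.
    r_cam = rot_z(pan) @ rot_y(tilt)
    # Express the point in the camera body frame (inverse rotation = transpose).
    p_body = r_cam.T @ np.asarray(p_robot, dtype=float)
    # Body frame (x fwd, y left, z up) -> optical frame (x right, y down, z fwd).
    x_opt, y_opt, z_opt = -p_body[1], -p_body[2], p_body[0]
    if z_opt <= 1e-9:
        return None
    # Ideal pinhole projection (lens distortion ignored).
    return (fx * x_opt / z_opt + cx, fy * y_opt / z_opt + cy)


# A wall corner 2 m ahead and 1 m to the left, with the operator's head turned towards it.
print(project_map_point((2.0, 1.0, 0.0), pan=math.atan2(1.0, 2.0), tilt=0.0))
```

In the usage line the corner should land (approximately) at the image centre (320, 240), because the camera is turned to look straight at it. Feeding the same pan and tilt angles to both the camera servos and the renderer is what keeps the overlay aligned with the live images; the stereo baseline and calibration offsets are left out of this sketch.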

We will use real firefighter training camps as an experimental setup, building on an existing relationship between IST/ISR and the Lisbon fire brigade. Cooperation with firefighter teams will also provide participants for initial task analyses and for later usability evaluations of the system in the field. As robotic platforms, we will use those already available at IST/ISR (e.g., the RAPOSA robot, along with other commercial platforms), upgrading them with the equipment needed to achieve the project goals: 3D ranging sensors, calibrated stereo camera pairs on pan-and-tilt mountings, and increased computing power.

Partners

The Institute for Systems and Robotics (IST/ISR), at Instituto Superior Técnico (Lisbon, Portugal), is a university-based R&D institution conducting multidisciplinary advanced research in Robotics and Information Processing, including Systems and Control Theory, Signal Processing, Computer Vision, Optimization, AI and Intelligent Systems, and Biomedical Engineering. Applications include Autonomous Ocean Robotics, Search and Rescue, Mobile Communications, Multimedia, Satellite Formation, and Robotic Aids.

The work of the Madeira Interactive Technologies Institute (M-ITI) (Madeira, Portugal) concentrates mainly on innovation in computer science, human-computer interaction, and entertainment technology.

People

Rodrigo Ventura

Ian Oakley

Alexandre Bernardino

José Gaspar

José Corujeira

Filipe Jesus

Pedro Vieira

João Mendes

João O'Neill

José Reis

Robot description

Following the success of RAPOSA, the company IdMind developed a commercial version of the robot, improving it in various ways. Notably, RAPOSA's rigid chassis, which is eventually deformed plastically by frequent shocks, was replaced by a semi-flexible structure that can absorb inelastic shocks and is significantly lighter than the original RAPOSA.

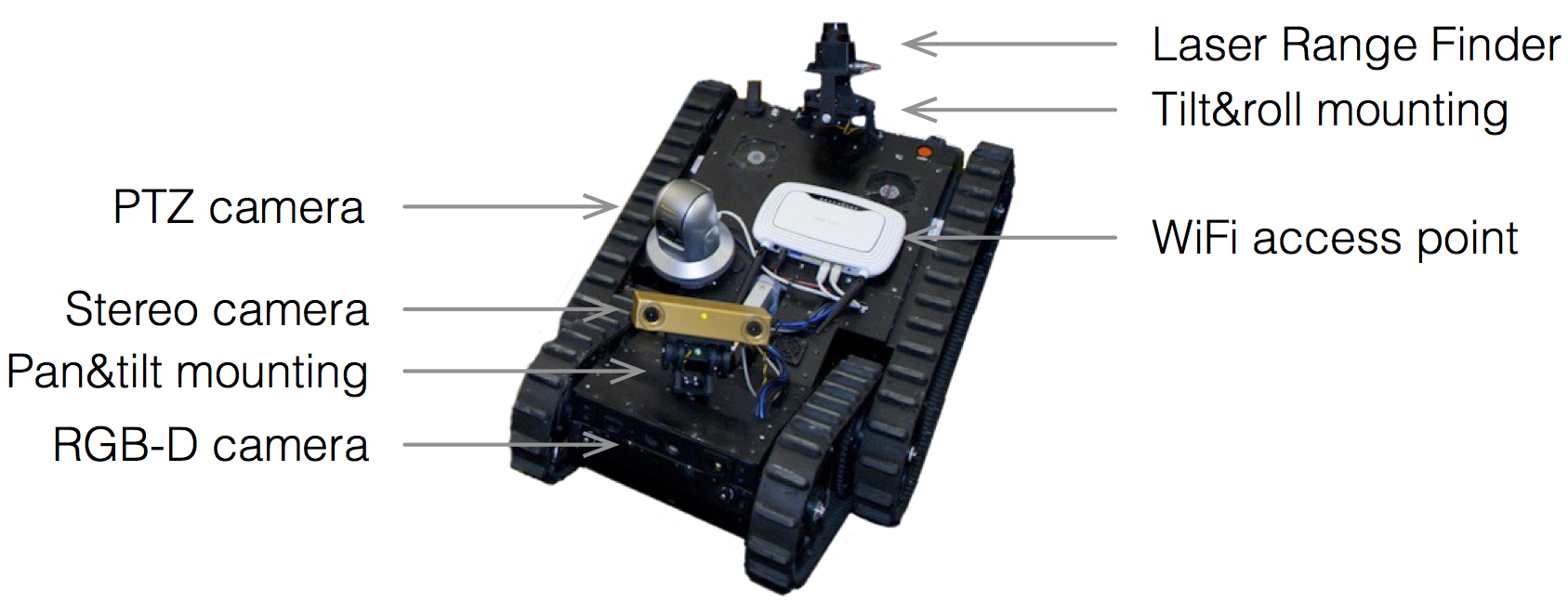

ISR acquired a barebones version of this robot, called RAPOSA-NG, and equipped it with a different set of sensors, following lessons learnt from previous research with RAPOSA. In particular, it is equipped with:

- a stereo camera unit (PointGrey Bumblebee2) on a pan-and-tilt motorized mounting;

- a laser range finder (LRF) on a tilt-and-roll motorized mounting;

- a pan-tilt-zoom (PTZ) IP camera;

- an Inertial Measurement Unit (IMU).

This equipment was chosen not only to better fit our research interests, but also to target the RoboCup Robot Rescue competition. The stereo camera is primarily used together with a head-mounted display (HMD) worn by the operator: the stereo images are displayed on the HMD, providing the operator with depth perception, while the attitude of the stereo camera is controlled by the head tracker built into the HMD.

The LRF is used in one of two modes: 2D mapping or 3D mapping. In 2D mapping, we assume that the environment is made of vertical walls. However, since we cannot assume horizontal ground, the LRF sits on a tilt-and-roll motorized mounting that automatically compensates for the robot's attitude: an internal IMU measures the attitude of the robot body and drives the mounting servos so that the LRF scanning plane remains horizontal.

The IP camera is used for detailed inspection: its GUI allows the operator to point the camera at a target area and zoom in on a small region of the environment. This is particularly relevant for remote inspection tasks in urban search and rescue (USAR). The IMU is used both to provide the remote operator with readings of the robot's attitude and for automatic localization and mapping.
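The attitude compensation performed by the LRF's tilt-and-roll mounting can be sketched as follows. This is a minimal sketch under stated assumptions, not RAPOSA-NG's actual controller: the IMU is assumed to report body roll and pitch (yaw does not affect leveling), the robot frame is x forward, y left, z up, and the hypothetical mounting has a tilt axis (about the body y axis) that carries the roll axis. The function `leveling_angles` and the 45° `SERVO_LIMIT` are illustrative. It computes the servo angles that bring the LRF's z axis back to vertical, which is the same as keeping the scanning plane horizontal.

```python
import math

import numpy as np


def rot_x(a: float) -> np.ndarray:
    """Right-handed rotation about the x axis (roll)."""
    c, s = math.cos(a), math.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a: float) -> np.ndarray:
    """Right-handed rotation about the y axis (pitch / tilt)."""
    c, s = math.cos(a), math.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


SERVO_LIMIT = math.radians(45.0)  # hypothetical mechanical range of each servo


def leveling_angles(roll: float, pitch: float, limit: float = SERVO_LIMIT):
    """Servo angles (tilt, mount_roll) in radians that keep the LRF scan plane level.

    Body attitude is R = Rz(yaw) @ Ry(pitch) @ Rx(roll); the mount adds
    Ry(tilt) @ Rx(mount_roll). We require R @ Ry(tilt) @ Rx(mount_roll) @ ez = ez.
    """
    # World "up" expressed in the body frame: (Ry(pitch) @ Rx(roll)).T @ ez.
    gx = -math.sin(pitch)
    gy = math.sin(roll) * math.cos(pitch)
    gz = math.cos(roll) * math.cos(pitch)
    # Ry(t) @ Rx(r) @ ez = (sin t cos r, -sin r, cos t cos r); match it to g.
    mount_roll = -math.asin(max(-1.0, min(1.0, gy)))
    tilt = math.atan2(gx, gz)

    def clamp(a: float) -> float:
        # Saturate at the servo limits; beyond them the plane is only partly leveled.
        return max(-limit, min(limit, a))

    return clamp(tilt), clamp(mount_roll)


# Robot rolled 10 degrees and pitched -15 degrees on rubble.
print(leveling_angles(roll=math.radians(10.0), pitch=math.radians(-15.0)))
```

For small attitudes the result reduces to simply negating roll and pitch; the closed form stays exact for larger attitudes as long as neither servo saturates.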

Further information can be found in the book chapter Two Faces of Human–Robot Interaction: Field and Service Robots (Rodrigo Ventura), in New Trends in Medical and Service Robots: Challenges and Solutions, Mechanisms and Machine Science, Vol. 20, pp. 177-192, Springer, 2014.

Media

The following YouTube playlist contains four videos showing our team's participation in several scenarios:

- Field exercise in a joint operation with the GIPS/GNR Search and Rescue team (2014)

- ANAFS/GREM-2013 Search and Rescue exercise, showing a joint operation with GIPS/GNR (2013)

- Robot Rescue League of the international scientific event RoboCup in Eindhoven, NL (2013)

- Robot Rescue League of the international scientific event RoboCup German Open in Magdeburg, DE (2012)

Publications

- Henrique Martins, I. Oakley, Rodrigo Ventura, Design and evaluation of a head-mounted display for immersive 3-D teleoperation of field robots, Robotica, Cambridge University Press, 2014

- Rodrigo Ventura, Two Faces of Human–Robot Interaction: Field and Service Robots, in New Trends in Medical and Service Robots: Challenges and Solutions, Mechanisms and Machine Science, Springer, Vol. 20, pp. 177-192, 2014

- Rodrigo Ventura, P. Vieira, Interactive mapping using range sensor data under localization uncertainty, Journal of Automation, Mobile Robotics & Intelligent Systems, 6(1):47-53, 2013

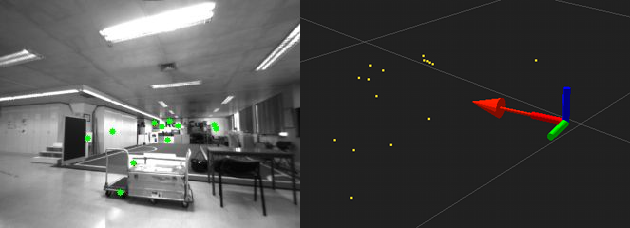

- Rodrigo Ventura, F. Jesus, Simultaneous localization and mapping for tracked wheel robots combining monocular and stereo vision, Journal of Automation, Mobile Robotics & Intelligent Systems, 6(1):21-27, 2013

- Pedro Vieira, Rodrigo Ventura, Interactive 3D Scan-Matching Using RGB-D Data, Proc. of ETFA – 17th IEEE International Conference on Emerging Technologies & Factory Automation, Krakow, Poland, 2012

- F. Jesus, Rodrigo Ventura, Combining monocular and stereo vision in 6D-SLAM for the localization of a tracked wheel robot, Proc. of SSRR 2012 – 10th IEEE International Symposium on Safety, Security, and Rescue Robotics, College Station, TX, USA, 2012

- P. Vieira, Rodrigo Ventura, Interactive mapping in 3D using RGB-D data, Proc. of SSRR 2012 – 10th IEEE International Symposium on Safety, Security, and Rescue Robotics, College Station, TX, USA, 2012

- J. Reis, Rodrigo Ventura, Immersive robot teleoperation using an hybrid virtual and real stereo camera attitude control, Proc. of RecPad 2012 – 18th Portuguese Conference on Pattern Recognition, Coimbra, Portugal, 2012

- Rodrigo Ventura, Pedro Lima, Search and rescue robots: The civil protection teams of the future, Proc. of EST 2012 – 3rd International Conference on Emerging Security Technologies, pp. 12-19, Lisbon, Portugal, 2012

Contacts

Rodrigo Ventura

rodrigo.ventura (at) isr.tecnico.ulisboa.pt

+351 21 841 8289

Torre Norte, Av. Rovisco Pais 1, 1049-001 Lisboa