Cognitive Architectures

In the engineering of machine intelligence, one cannot disregard the natural intelligence displayed by humans and other animals. Intelligence is understood here in the broad sense of behaving appropriately when situated in an environment. This covers a fight-or-flight response that secures survival as much as a mathematician proving a theorem. Such situations are hard to cast as a neat optimization problem, so scientists often look to biology for inspiration: problems that biological evolution has already solved can, at the very least, provide guidance on how to approach them.

Research in this area focuses on:

- decision-making, in particular the interplay between emotion mechanisms and rationality;

- robotic platforms (e.g., humanoid robots) situated in a physical environment and interacting socially with humans, other robots, and objects in the environment;

- functional architectures, in particular those for cognitive robots.

Research activities

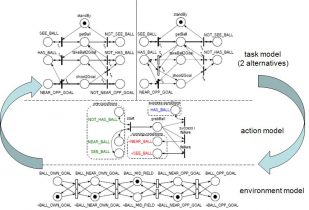

Interest in cognitive architectures originated in previous research carried out by the group on emotion-based agent models. Neuroscientific findings on the role that emotion mechanisms in the brain play in decision-making drove this research towards the construction and experimental testing of agent models that take those aspects into account.

This interest also stems from the group's long-standing work on functional architectures for intelligent robots, inspired by works such as the NIST RCS (Real-time Control System) architecture by James Albus.

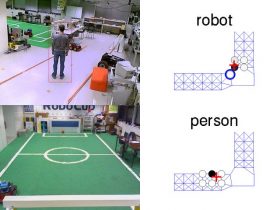

As ISR is one of the partners in the RobotCub EU project, research has been conducted together with VisLab using the iCub, the humanoid robot being developed within this project. Its anthropomorphic design makes the iCub an attractive platform for developing cognitive architectures. In particular, a developmental approach has been taken: cognitive capabilities are built on top of more basic ones, in a modular fashion.

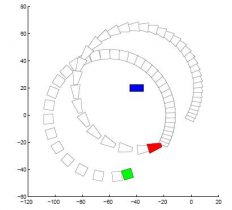

The IRSg has worked on endowing the iCub cognitive architecture with a spatial model of the environment. This model is built on top of a basic attention system driven by perceptual features. At the current stage of this research, the iCub can learn new objects when they are shown close to its “eyes”. Whenever learnt objects are found in the environment, the iCub gazes at them sequentially.
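The behaviour described above can be illustrated with a minimal sketch. It is not the IRSg implementation. It assumes that the attention system already delivers each salient region as a feature vector plus a gaze direction (azimuth, elevation), and it substitutes a simple nearest-prototype classifier for object recognition. The class `SpatialMemory`, its methods, and the distance threshold are all hypothetical.

```python
import math
from dataclasses import dataclass, field

# Hypothetical sketch, not the iCub/IRSg code: a tiny spatial memory that
# learns object prototypes and remembers where recognized objects were seen.
@dataclass
class SpatialMemory:
    threshold: float = 0.5  # assumed maximum feature distance for a match
    prototypes: dict = field(default_factory=dict)  # label -> feature vector
    locations: dict = field(default_factory=dict)   # label -> (azimuth, elevation)

    def learn(self, label, features):
        """Store the features of an object shown close to the cameras."""
        self.prototypes[label] = list(features)

    def recognize(self, features):
        """Return the nearest learnt label, or None if none is close enough."""
        best, best_d = None, math.inf
        for label, proto in self.prototypes.items():
            d = math.dist(proto, features)  # Euclidean distance
            if d < best_d:
                best, best_d = label, d
        return best if best_d <= self.threshold else None

    def observe(self, features, direction):
        """Process one salient region proposed by the (assumed) attention system."""
        label = self.recognize(features)
        if label is not None:
            self.locations[label] = direction  # update the spatial model
        return label

    def gaze_sequence(self):
        """Gaze targets, in the order in which the objects were first located."""
        return list(self.locations.items())
```

For example, after `learn("ball", [1.0, 0.0, 0.0])`, a region with features `[0.9, 0.1, 0.0]` observed at `(10.0, -5.0)` is recognized as `"ball"`, and `gaze_sequence()` then returns that direction as a gaze target. Relying on dictionary insertion order keeps the sketch short; a real system would also need to handle objects that move or leave the field of view.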

In the internal SocRob project, we have also spent several years developing functional and software architectures for multi-robot systems and for single robots interacting with humans at home. In this setting, the science of integration is crucial to developing operational systems.

References

- Alexandre Antunes, Lorenzo Jamone, Giovanni Saponaro, Alexandre Bernardino, Rodrigo Ventura, “From Human Instructions to Robot Actions: Formulation of Goals, Affordances and Probabilistic Planning”, Proc. of ICRA 2016 – IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 2016.

- Lorenzo Jamone, Giovanni Saponaro, Alexandre Antunes, Rodrigo Ventura, Alexandre Bernardino, José Santos-Victor, “Learning object affordances for tool use and problem solving in cognitive robots”, AIRO 2015 – 2nd Italian Workshop on Artificial Intelligence and Robotics, Ferrara, Italy, 2015.

- Bruno Nery, Rodrigo Ventura, “A dynamical systems approach to online event segmentation in cognitive robotics”, Paladyn. Journal of Behavioral Robotics, 2(1):18–24, March 2011.

- Dario Figueira, Manuel Lopes, Rodrigo Ventura, Jonas Ruesch, “From Pixels to Objects: Enabling a spatial model for humanoid social robots”, ICRA-09 – IEEE International Conference on Robotics and Automation, Kobe, Japan, 2009.

- Pedro Lima, Carlos Azevedo, Emilia Brzozowska, João Cartucho, Tiago Dias, Joao Goncalves, Mithun Kinarullathil, Guilherme Lawless, Oscar Lima, Rute Luz, Pedro Miraldo, Enrico Piazza, Miguel Silva, Tiago Veiga, Rodrigo Ventura, “SocRob@Home: Integrating AI Components in a Domestic Robot System”, KI – Künstliche Intelligenz, 2019.